Alternative 2 – Parallel and synchronous task execution

So, you hire 12 bartenders, and you calculate that you can serve about 360 customers an hour. The line is barely going out the door now, and revenue is looking great.

One month goes by and again, you’re almost out of business. How can that be?

It turns out that having 12 bartenders is pretty expensive. Even though revenue is high, the costs are even higher. Throwing more resources at the problem doesn’t really make the bar more efficient.

Alternative 3 – Asynchronous task execution with one bartender

So, we’re back to square one. Let’s think this through and find a smarter way of working instead of throwing more resources at the problem.

You ask your bartender whether they can start taking new orders while the beer settles so that they’re never just standing and waiting while there are customers to serve. The opening night comes and…

Wow! On a busy night where the bartender works non-stop for a few hours, you calculate that they now spend only a little over 20 seconds on each order. You’ve basically eliminated all the waiting. Your theoretical throughput is now 240 beers per hour. If you add one more bartender, you’ll have higher throughput than you had with 12 bartenders.
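To make the idea concrete, here is a minimal sketch of a single bartender who takes new orders while earlier beers settle, written with Python's `asyncio`. The durations `POUR`, `SETTLE`, and `TOP_UP` are hypothetical, scaled-down values chosen only so the example runs quickly, and the lock is just one way of modelling "there is only one pair of hands".

```python
import asyncio
import time

# Hypothetical, scaled-down durations in seconds (not taken from the text).
POUR = 0.02     # active work: pouring
SETTLE = 0.10   # waiting: the beer settles and the bartender is free
TOP_UP = 0.01   # active work: topping up and serving


async def serve_order(order_id: int, bartender: asyncio.Lock, served: list[int]) -> None:
    # Active work needs the bartender, so it is guarded by the lock.
    # (asyncio.sleep stands in for the time the bartender is busy.)
    async with bartender:
        await asyncio.sleep(POUR)
    # While the beer settles, the lock is released and other orders can progress.
    await asyncio.sleep(SETTLE)
    async with bartender:
        await asyncio.sleep(TOP_UP)
    served.append(order_id)


async def run_bar(n_orders: int) -> tuple[list[int], float]:
    bartender = asyncio.Lock()  # a single bartender
    served: list[int] = []
    start = time.perf_counter()
    await asyncio.gather(*(serve_order(i, bartender, served) for i in range(n_orders)))
    return served, time.perf_counter() - start


def synchronous_time(n_orders: int) -> float:
    # One order at a time, with the settling time spent standing and waiting.
    return n_orders * (POUR + SETTLE + TOP_UP)


if __name__ == "__main__":
    served, elapsed = asyncio.run(run_bar(5))
    print(f"asynchronous: {elapsed:.2f}s, synchronous would take {synchronous_time(5):.2f}s")
```

In this sketch, the settling times of different orders overlap, so the total time is dominated by the active work rather than by the waiting.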

However, you realize that you didn’t actually reach 240 beers an hour, since orders arrive somewhat erratically rather than evenly spaced over time. Sometimes the bartender is busy with a new order when a beer finishes settling, so they can’t top it up and serve it right away. In real life, the throughput is only 180 beers an hour.

Still, two bartenders could serve 360 beers an hour this way, the same number that you served while employing 12 bartenders.
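The comparison can be checked with a few lines of arithmetic. The sketch below uses only the throughput figures stated above; the variable names are illustrative.

```python
# Figures as stated in the text, in beers per hour.
sync_total = 360         # Alternative 2: 12 bartenders working synchronously
sync_bartenders = 12
async_theoretical = 240  # Alternative 3: one bartender, theoretical maximum
async_observed = 180     # Alternative 3: one bartender, observed in real life

per_sync_bartender = sync_total / sync_bartenders       # beers per hour per bartender
speedup = async_observed / per_sync_bartender           # gain per bartender
two_async_bartenders = 2 * async_observed               # two bartenders, Alternative 3
share_of_maximum = async_observed / async_theoretical   # observed vs. theoretical

print(per_sync_bartender)    # 30.0
print(speedup)               # 6.0
print(two_async_bartenders)  # 360
print(share_of_maximum)      # 0.75
```

Each asynchronous bartender does the work of six synchronous ones, even though they reach only three quarters of the theoretical maximum.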

This is good, but you ask yourself whether you can do even better.

Alternative 4 – Parallel and asynchronous task execution with two bartenders

What if you hire two bartenders and ask them to work just as described in Alternative 3, but with one change: you allow them to steal each other’s tasks? Bartender 1 can start pouring and set the beer down to settle, and bartender 2 can top it up and serve it if bartender 1 is busy pouring a new order at that time. This way, it is rare for both bartenders to be busy at the moment one of the beers in progress becomes ready to be topped up and served. Almost all orders are finished and served in the shortest possible time, so customers leave the bar with their beer sooner and make room for customers who want to place a new order.
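One way to picture this is two worker threads pulling tasks from a single shared queue, sketched below in Python. This is a simplification: real work-stealing schedulers usually give each worker its own queue and let idle workers steal from the others, whereas here both bartenders simply share one queue. The durations and names (`bartender`, `run_bar`, `bartender-1`) are hypothetical.

```python
import queue
import threading
import time

# Hypothetical, scaled-down durations in seconds (not taken from the text).
POUR, SETTLE, TOP_UP = 0.02, 0.10, 0.01


def bartender(name: str, tasks: queue.Queue, served: queue.Queue) -> None:
    while True:
        task = tasks.get()
        if task is None:  # sentinel: the bar is closing
            return
        step, order_id, poured_by = task
        if step == "pour":
            time.sleep(POUR)  # active work
            # The beer settles on its own. When it is ready, the top-up task
            # goes back on the shared queue so that either bartender can take it.
            threading.Timer(
                SETTLE, tasks.put, args=(("top_up", order_id, name),)
            ).start()
        else:
            time.sleep(TOP_UP)  # active work
            served.put((order_id, poured_by, name))


def run_bar(n_orders: int, n_bartenders: int = 2) -> list[tuple[int, str, str]]:
    tasks: queue.Queue = queue.Queue()
    served: queue.Queue = queue.Queue()
    for order_id in range(n_orders):
        tasks.put(("pour", order_id, None))
    workers = [
        threading.Thread(target=bartender, args=(f"bartender-{i + 1}", tasks, served))
        for i in range(n_bartenders)
    ]
    for w in workers:
        w.start()
    results = [served.get() for _ in range(n_orders)]  # wait for every order
    for _ in workers:
        tasks.put(None)  # tell each bartender to stop
    for w in workers:
        w.join()
    return results


if __name__ == "__main__":
    for order_id, poured_by, served_by in sorted(run_bar(6)):
        print(f"order {order_id}: poured by {poured_by}, served by {served_by}")
```

Which bartender ends up serving which beer varies from run to run, and that is the point: whoever is free picks up the next task that is ready.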

This way, you can increase throughput even further. You still won’t reach the theoretical maximum, but you’ll get very close. On the opening night, you realize that the bartenders now process 230 orders an hour each, giving a total throughput of 460 beers an hour.

Revenue looks good, customers are happy, costs are kept at a minimum, and you’re one happy manager of the weirdest bar on earth (an extremely efficient bar, though).

The key takeaway

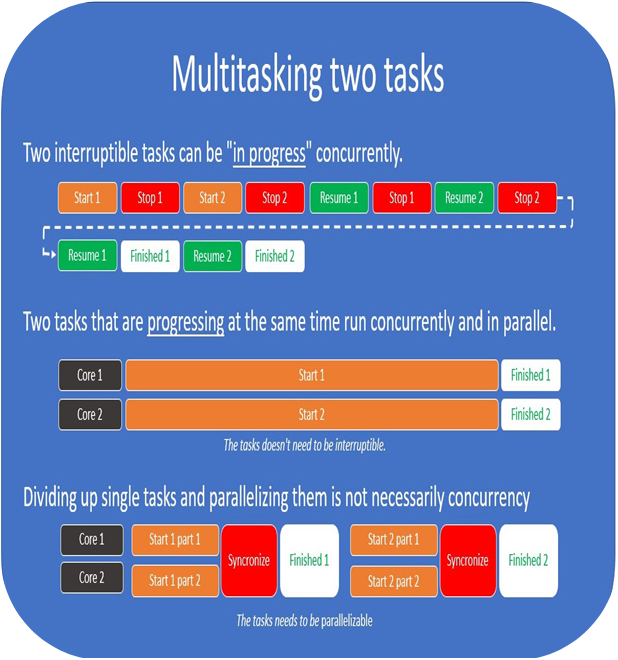

Concurrency is about working smarter. Parallelism is a way of throwing more resources at the problem.