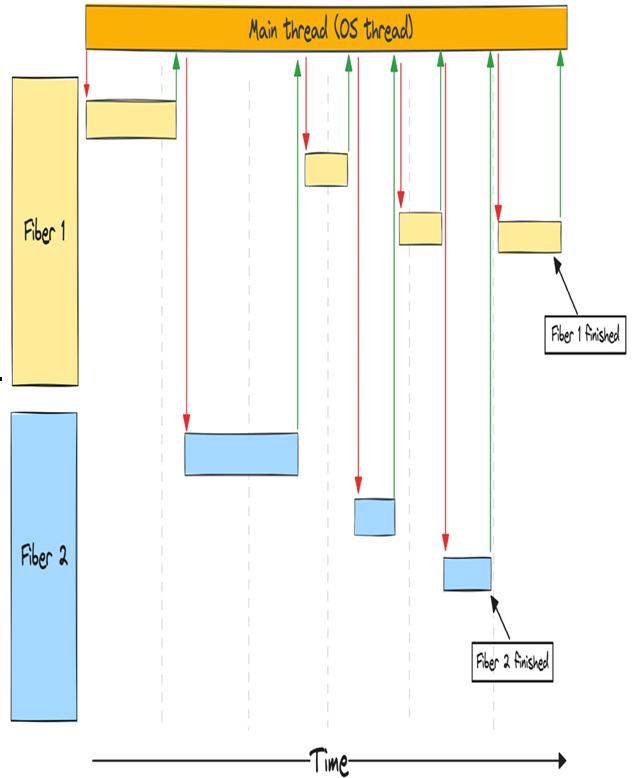

Pre-emption points can be thought of as inserted code that calls into the scheduler and asks whether it wishes to pre-empt the task. These points can be inserted by the compiler or by the library you use, for example, before every function call.
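To make the idea concrete, here is a minimal sketch of what a pre-emption point could look like if you wrote it by hand. The `Scheduler` type, its budget-based policy, and the `should_preempt`, `step`, and `run_task` names are all assumptions made for illustration; a real runtime would decide when to pre-empt in its own way and would actually switch to another task instead of just counting.

```rust
/// Hypothetical scheduler that lets a task pass a fixed number of
/// pre-emption points before asking it to yield.
struct Scheduler {
    budget: u32,
    remaining: u32,
    preemptions: u32,
}

impl Scheduler {
    fn new(budget: u32) -> Self {
        Scheduler { budget, remaining: budget, preemptions: 0 }
    }

    /// The pre-emption point: the task asks whether it should yield.
    fn should_preempt(&mut self) -> bool {
        if self.remaining == 0 {
            // Budget used up: record the pre-emption and hand out a new budget.
            self.remaining = self.budget;
            self.preemptions += 1;
            true
        } else {
            self.remaining -= 1;
            false
        }
    }
}

/// Stand-in for "some function the task calls".
fn step(n: u64) -> u64 {
    n + 1
}

/// Calls `step` a number of times with a pre-emption point before each call,
/// the way a compiler or library might insert it.
fn run_task(scheduler: &mut Scheduler, calls: u32) -> u64 {
    let mut acc = 0;
    for _ in 0..calls {
        if scheduler.should_preempt() {
            // A real runtime would save the task's state here
            // and switch to another task.
        }
        acc = step(acc);
    }
    acc
}

fn main() {
    let mut scheduler = Scheduler::new(3);
    let result = run_task(&mut scheduler, 10);
    println!("result: {result}, pre-emptions: {}", scheduler.preemptions);
}
```

Note that the task can only be stopped at the points where it asks. Between two checks, it runs uninterrupted.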

Furthermore, you need compiler support to make the most of it. Languages that have metaprogramming abilities (such as macros) can emulate much of the same, but the result will still not be as seamless as it is when the compiler is aware of these special async tasks.

Debugging is another area where care must be taken when implementing futures/promises. Since the code is re-written as state machines (or generators), you won’t have the same stack traces as you do with normal functions. Usually, you can assume that the caller of a function is what precedes it both in the stack and in the program flow. For futures and promises, it might be the runtime that calls the function that progresses the state machine, so there might not be a good backtrace you can use to see what happened before calling the function that failed. There are ways to work around this, but most of them will incur some overhead.
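The following is a rough sketch of why the backtrace looks different. It shows a hand-written state machine of the kind a compiler might generate, driven by a tiny stand-in for a runtime. The `Task` and `Step` types and the `resume` and `run_to_completion` functions are hypothetical names chosen for this example and are not taken from any particular library.

```rust
/// Hypothetical task rewritten as a state machine with explicit states.
enum Task {
    Start,
    Waiting { attempts: u32 },
    Done,
}

/// Result of progressing the state machine once.
enum Step {
    Pending,
    Ready(u32),
}

impl Task {
    /// Progresses the task to its next wait point.
    /// This is called by the runtime, not by the code that created the task.
    fn resume(&mut self) -> Step {
        // Take the current state out, leaving `Done` as a placeholder.
        match std::mem::replace(self, Task::Done) {
            Task::Start => {
                *self = Task::Waiting { attempts: 0 };
                Step::Pending
            }
            Task::Waiting { attempts } if attempts < 2 => {
                // Pretend the operation we wait for isn't ready yet.
                *self = Task::Waiting { attempts: attempts + 1 };
                Step::Pending
            }
            Task::Waiting { attempts } => Step::Ready(attempts),
            Task::Done => panic!("task resumed after completion"),
        }
    }
}

/// Stand-in for a runtime: keeps resuming the task until it finishes.
/// Returns the task's result and the number of times it was resumed.
fn run_to_completion(mut task: Task) -> (u32, u32) {
    let mut resumes = 0;
    loop {
        resumes += 1;
        if let Step::Ready(value) = task.resume() {
            return (value, resumes);
        }
    }
}

fn main() {
    let (value, resumes) = run_to_completion(Task::Start);
    println!("finished with {value} after {resumes} resumes");
}
```

If `resume` panicked, the frame right above it in the backtrace would be `run_to_completion`, not the code that originally created the task, which is the loss of context described above.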

Advantages

• You can write code and model programs the same way you normally would

• No context switching

• It can be implemented in a very memory-efficient way

• It’s easy to implement for various platforms

Drawbacks

• Pre-emption can be hard, or impossible, to fully implement, as the tasks can’t be stopped in the middle of a stack frame

• It needs compiler support to leverage its full advantages

• Debugging can be difficult due to both the non-sequential program flow and the limited information you get from backtraces

Summary

You’re still here? That’s excellent! Good job on getting through all that background information. I know going through text that describes abstractions and code can be pretty daunting, but I hope you see why it’s so valuable for us to go through these higher-level topics now at the start of the book. We’ll get to the examples soon. I promise!

In this chapter, we went through a lot of information on how we can model and handle asynchronous operations in programming languages by using both OS-provided threads and abstractions provided by a programming language or a library. While it’s not an exhaustive list, we covered some of the most popular and widely used technologies while discussing their advantages and drawbacks.

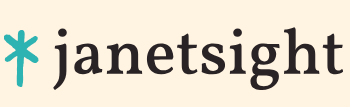

We spent quite some time going in-depth on threads, coroutines, fibers, green threads, and callbacks, so you should have a pretty good idea of what they are and how they’re different from each other.

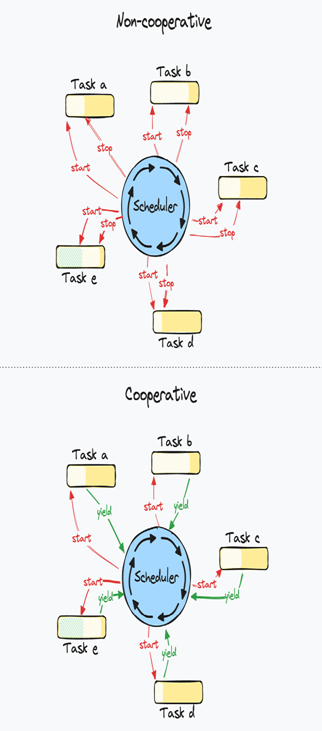

The next chapter will go into detail about how we do system calls and create cross-platform abstractions. It will also explain what OS-backed event queues such as epoll, kqueue, and IOCP really are and why they’re fundamental to most async runtimes you’ll encounter out in the wild.